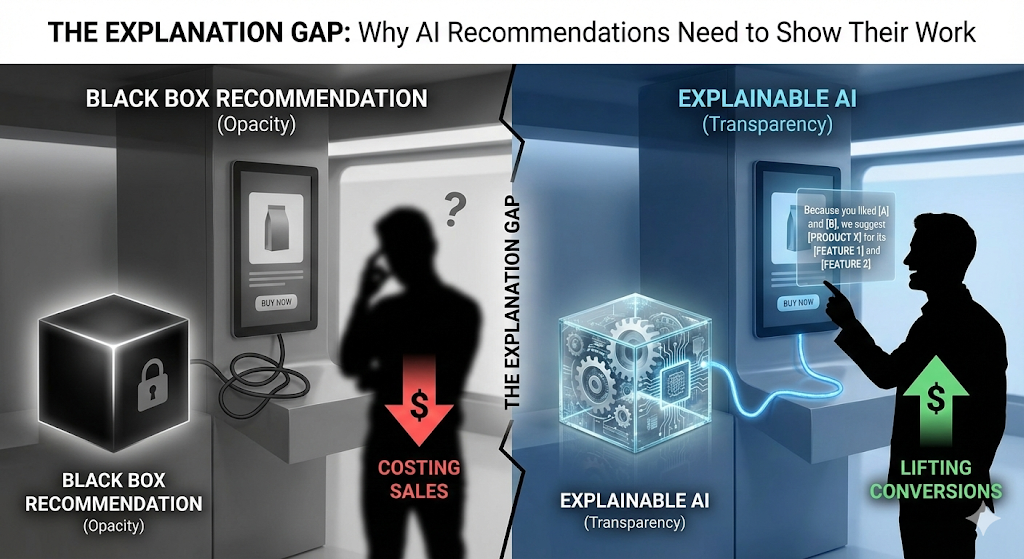

The Explanation Gap: Why AI Recommendations Need to Show Their Work

Opacity Is Costing You Sales

Black-box recommendations are costing you conversions. And soon, they'll cost you compliance.

When an AI agent suggests a product, most systems treat the logic like a trade secret. The user sees the recommendation. They don't see why.

That opacity isn't just bad UX. It's a measurable revenue leak and a growing regulatory liability.

A/B tests show explainable recommendations lift click-through rates by 3 to 10% and conversion by 3 to 15% over opaque systems.

Platforms that show their reasoning see return rates drop and customer satisfaction scores rise. Over 70% of consumers say they're more likely to trust and return to platforms that explain their logic.

The Shift Most Teams Are Missing

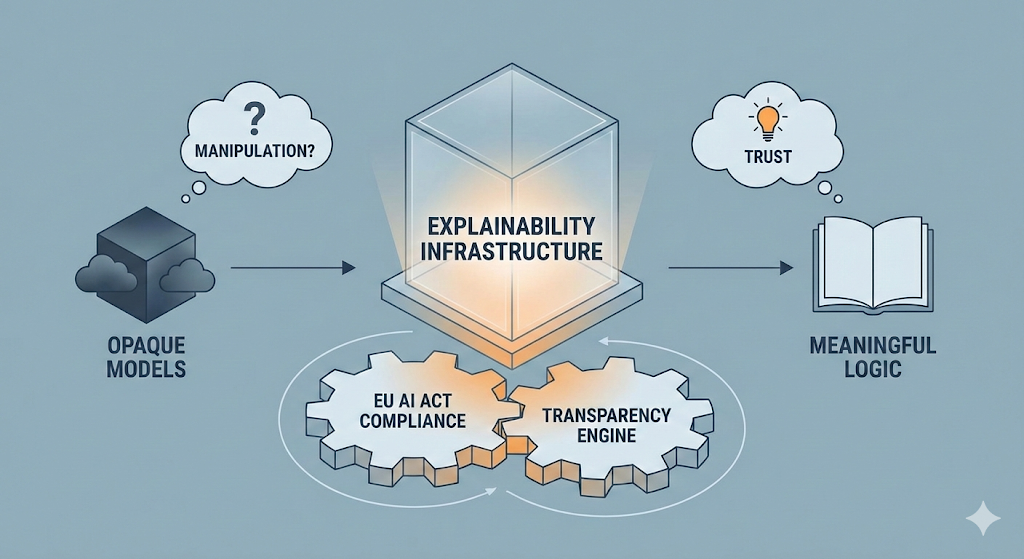

Explainability isn't a feature. It's infrastructure.

The EU's GDPR already requires "meaningful information about the logic involved," plus the significance and consequences, for certain automated decisions. The EU's AI Act adds transparency obligations of its own.

That's not a suggestion. It's a compliance mandate with enforcement teeth.

If your recommendation engine can't surface its reasoning on demand, you're not just leaving conversion on the table. You're building technical debt that regulators will force you to unwind.

The problem is that most AI systems weren't designed for transparency. Models trained on collaborative filtering or neural embeddings produce results that are statistically accurate but logically opaque.

The user sees a product. The system can't explain why.

Marketing calls it personalization. Users call it manipulation.

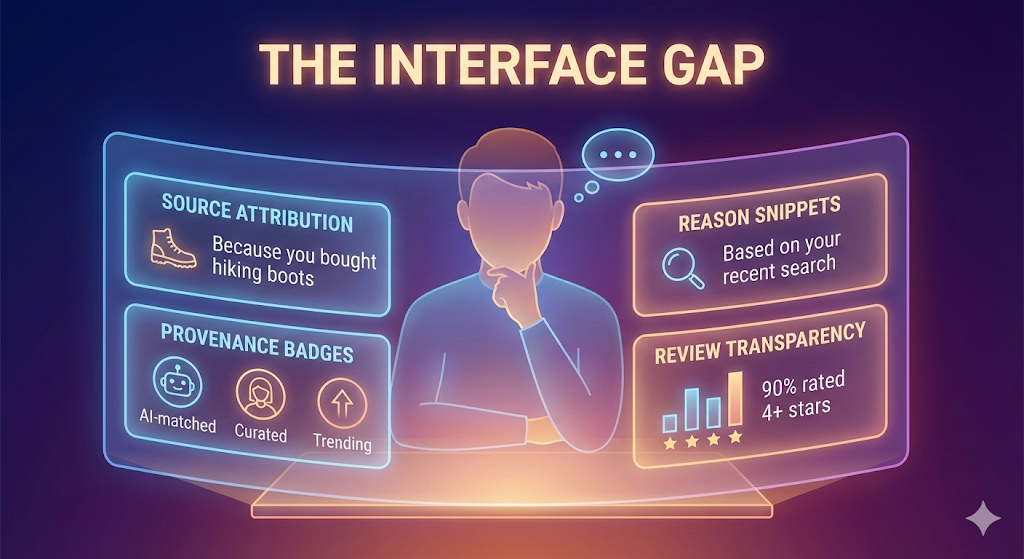

The Interface Gap, Not the Algorithm Gap

You don't need to expose the math. You need to surface the signal.

Source attribution.

"Because you bought hiking boots last month" or "Matches your wishlist preferences." Simple. Specific. Builds trust instantly.

Reason snippets.

"We're suggesting this based on your recent search for running shoes." Tie the recommendation to user behavior, not invisible magic.

Provenance badges.

"AI-matched," "Curated by experts," or "Trending in your category." Let users know where the signal came from.

Review transparency.

"90% of buyers like you rated it 4+ stars." Show aggregate sentiment, not just scores. Context matters.

"Why this?" links.

A prominent link that reveals the criteria, the confidence level, and opt-out options. Users who understand the logic are more likely to act on it.
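The patterns above all come down to one thing: the recommendation has to carry its reasoning with it. Here is a minimal sketch in Python of what that could look like. Every name in it (`Explanation`, `Recommendation`, `render_card`, `render_why_this`, and the field names) is hypothetical and invented for illustration, not taken from any real recommendation API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Explanation:
    # Hypothetical fields; adjust to whatever signals your system actually logs.
    source: str                          # where the signal came from, e.g. "purchase_history"
    reason: str                          # user-facing reason snippet
    badge: str                           # provenance badge, e.g. "AI-matched"
    confidence: Optional[float] = None   # 0.0 to 1.0, shown behind "Why this?"
    criteria: List[str] = field(default_factory=list)


@dataclass
class Recommendation:
    product_id: str
    title: str
    explanation: Optional[Explanation] = None


def render_card(rec: Recommendation) -> str:
    """Text for the recommendation card, with reasoning when it exists."""
    lines = [rec.title]
    if rec.explanation is None:
        return "\n".join(lines)
    exp = rec.explanation
    lines.append(f"[{exp.badge}] {exp.reason}")
    lines.append("Why this?")
    return "\n".join(lines)


def render_why_this(rec: Recommendation) -> str:
    """Detail text shown when the user opens the 'Why this?' link."""
    exp = rec.explanation
    if exp is None:
        return "No explanation is available for this recommendation."
    parts = [f"Signal source: {exp.source}"]
    if exp.criteria:
        parts.append("Criteria: " + ", ".join(exp.criteria))
    if exp.confidence is not None:
        parts.append(f"Confidence: {exp.confidence:.0%}")
    parts.append("Don't want suggestions like this? Opt out in settings.")
    return "\n".join(parts)


rec = Recommendation(
    product_id="sku-1",
    title="Trail Socks",
    explanation=Explanation(
        source="purchase_history",
        reason="Because you bought hiking boots last month",
        badge="AI-matched",
        confidence=0.82,
        criteria=["recent purchase", "same category"],
    ),
)
print(render_card(rec))
print(render_why_this(rec))
```

The design choice worth noting: the explanation is optional on the data model, so a card without reasoning still renders. That also makes missing explanations easy to count later.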

What to Measure

CTR on explained vs. opaque recommendations.

A/B test transparent placements against black-box controls. The delta is your revenue gap.

Conversion rate by explainability.

Segment users who see reasoning vs. those who don't. Track lift.
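Tracking lift means two numbers: how big the difference is, and whether it could be noise. A rough sketch of both, assuming a simple two-arm test and a pooled two-proportion z-test. The function names are hypothetical and the sample counts are made up for illustration, not real results.

```python
import math


def relative_lift(control_rate: float, treatment_rate: float) -> float:
    """Relative lift of treatment over control; 0.10 means +10%."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (treatment_rate - control_rate) / control_rate


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled z statistic for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0 or all 1")
    return (p_b - p_a) / se


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value for a z statistic under the standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))


# Made-up counts: opaque control vs. explained treatment.
control_conv, control_n = 400, 10_000
treated_conv, treated_n = 460, 10_000

lift = relative_lift(control_conv / control_n, treated_conv / treated_n)
z = two_proportion_z(control_conv, control_n, treated_conv, treated_n)
print(f"lift={lift:.1%}, z={z:.2f}, p={two_sided_p_value(z):.3f}")
```

For anything that drives a roadmap decision, a proper experimentation platform or statistics library is the safer route. This only shows the shape of the calculation.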

Trust scores.

Pulse surveys asking if logic was clear. Five-star scale. Monthly cadence.

Return rates.

Explainable recommendations reduce post-purchase regret. Lower returns are a trailing indicator of better matching.

Compliance audit coverage.

Percentage of recommendations with proper disclosure and logic documentation. This is becoming a legal requirement, not a nice-to-have.
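Audit coverage is the easiest of these metrics to compute, provided recommendations are logged with their disclosure fields. A minimal sketch, assuming each logged recommendation is a dictionary. The required field names (`reason`, `source`, `model_version`) are assumptions chosen for illustration, not a legal checklist.

```python
from typing import Iterable, Mapping

# Hypothetical disclosure fields; replace with whatever your compliance team requires.
REQUIRED_FIELDS = ("reason", "source", "model_version")


def audit_coverage(logged_recs: Iterable[Mapping[str, object]]) -> float:
    """Share of logged recommendations that carry every required field, non-empty."""
    total = 0
    covered = 0
    for rec in logged_recs:
        total += 1
        if all(rec.get(name) for name in REQUIRED_FIELDS):
            covered += 1
    return covered / total if total else 0.0


logs = [
    {"reason": "Recent search for running shoes", "source": "search", "model_version": "v3"},
    {"reason": "Matches your wishlist", "source": "wishlist", "model_version": "v3"},
    {"reason": "", "source": "embedding", "model_version": "v3"},  # empty reason: not covered
    {"reason": "Trending in your category", "source": "trending", "model_version": "v3"},
]
print(f"Audit coverage: {audit_coverage(logs):.0%}")
```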

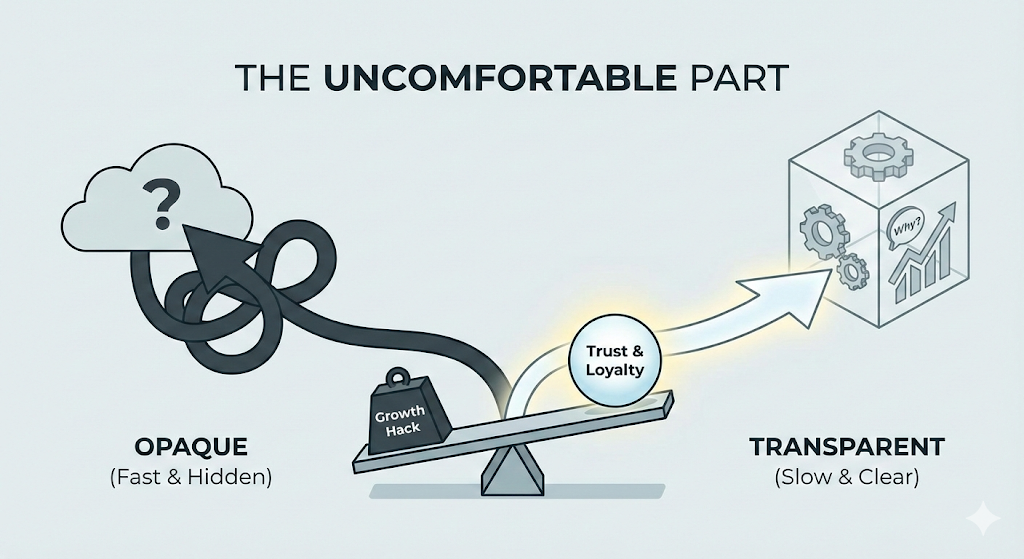

The Uncomfortable Part

Explainability slows down iteration.

Adding "why this?" links means maintaining metadata pipelines, auditing model outputs, and designing for transparency at the architecture level.

Teams that treat recommendations as a growth hack will resist the overhead.

But the math is clear. Transparent systems convert better, retain longer, and pass regulatory scrutiny.

Opaque systems optimize for short-term engagement at the cost of trust, compliance, and long-term revenue.

Streaming platforms with provenance and transparent logic report fewer complaints and higher loyalty. E-commerce brands testing explainable recommendations see 10 to 50% higher average order value (AOV) in controlled experiments.

The evidence isn't ambiguous.

Start Simple, Scale Strategically

Your AI doesn't need to be smarter. It needs to show its work.

Users trust what they understand. Regulators enforce what they can audit.

If your recommendation engine can't explain itself, it's not ready for production.

Start with one UX pattern. Add source attribution to your top recommendation slot. Measure the lift.

Then build the infrastructure to scale it across every surface where AI touches the customer.

The explanation gap is a conversion gap. Close it before your competitors do.
